Assessing How We Grade Assessments

By Dean Ballard, Director of Mathematics, CORE

When I started teaching math 35 years ago, chapter tests were easy to grade. Typically, a test had 20–25 problems, and I simply made each problem worth four or five points, whichever was needed to make the total 100 points. In many math curricula today, there may be only 5–10 problems total on a chapter, module, or unit assessment. Suppose I have a test with nine problems. If I assign equal weight to each of these problems and a student gets six of the nine correct, that student scores 67%. This often translates into a grade of D. Yet six out of nine correct may warrant a better grade when we consider the actual knowledge the student displays by correctly answering those six problems.

Many teachers use a standard percent-to-grade scale when determining grades for students: 90–100% is an A, 80–89% is a B, 70–79% is a C, 60–69% is a D, and below 60% is an F. Ten years into my teaching career, two parts of this grading system started to strike me as questionable. First, a student who correctly answers 59% of the problems I deemed appropriate to include on the assessment is still considered failing. Second, since I'm the one determining the weight of each problem on an assessment, what does the percent correct, or percent of total points, really mean?
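
As a minimal sketch, assuming the cutoffs above are inclusive lower bounds and ignoring plus/minus grades, the standard scale can be written as a small Python function. The name `letter_grade` is hypothetical and not part of any particular gradebook tool.

```python
def letter_grade(percent: float) -> str:
    """Map a percent score (0-100) to a letter on the common 10-point scale.

    Assumes each cutoff is an inclusive lower bound (90 and up is an A, etc.)
    and ignores plus/minus distinctions.
    """
    if percent >= 90:
        return "A"
    if percent >= 80:
        return "B"
    if percent >= 70:
        return "C"
    if percent >= 60:
        return "D"
    return "F"


# Six of nine equally weighted problems correct is about 66.7%, a D on this scale.
print(letter_grade(100 * 6 / 9))
# A student who answers 59% of the problems correctly is failing on this scale.
print(letter_grade(59))
```

The scale itself knows nothing about which problems a student answered, only the final percent, which is exactly the limitation the rest of this post takes on.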

Suppose that on the nine-problem assessment mentioned above, problems 1–6 are short-answer problems that assess low-level facts, procedures, or basic conceptual knowledge. I think of these as the types of problems I might include on exit tickets for lessons. Problems 7–9 are deeper-level questions, increasing in rigor with each problem. Each of these three problems has multiple parts and requires both a solution and a justification for the solution.

I used to look at this type of assessment and believe that problems 1–6 should be worth fewer points than problems 7–9, because each of the first six problems was easier to solve and revealed less depth of knowledge. I might have made each of the first six problems worth five points and each of the last three worth 10 points, giving this nine-problem assessment a maximum score of 60 points. If a student answered all of the first six problems correctly but none of the last three, the student received 30 out of 60 points, for a final score of 50%. This student was considered failing. I started to ask myself, "Is this student's performance on the assessment really at a level that I consider below proficient?" To answer this question, I needed to start assessing the assessment and assessing how I graded it.
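
Written out, the arithmetic for a student who answers only problems 1–6 correctly under those old weights (five points each for problems 1–6, 10 points each for problems 7–9) is:

$$
\frac{\text{points earned}}{\text{points possible}} = \frac{6 \times 5}{6 \times 5 + 3 \times 10} = \frac{30}{60} = 50\%
$$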

I began by mapping the assessment problems according to what it meant for a student to demonstrate minimal proficiency. I first determined which problems a student should be able to answer correctly if that student was at the lower end of proficiency on the key material in the unit being assessed. Another way I framed this was: which problems provide evidence that a student is minimally prepared to take on the next lesson? This was my baseline for proficiency, or at least a C- grade, on the assessment. I started to base proficiency on the content of the problems answered correctly rather than on the number of problems answered correctly.

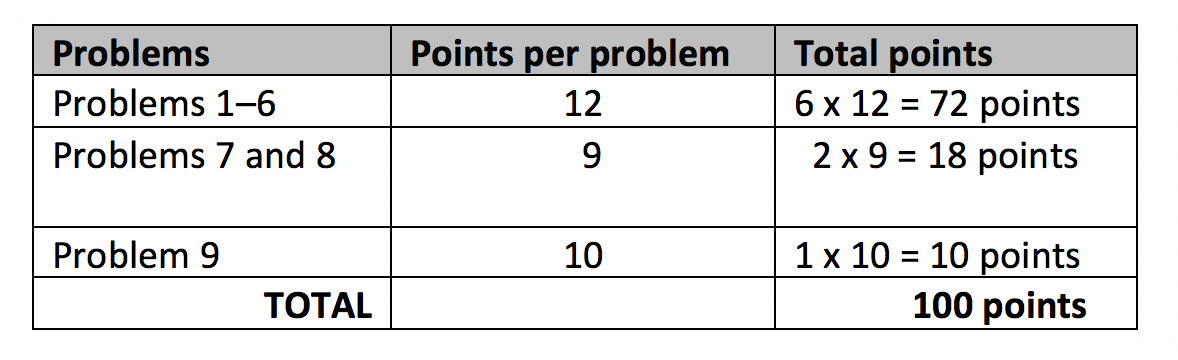

With this reasoning in mind, I would score the nine-problem assessment differently than previously described. I would treat the six basic-level problems as the base proficiency level. Sticking with the idea that a grade of C- or above requires at least 70%, I would assign enough points to these first six problems to make them worth 70–75% of the points on the assessment. To make things easy on myself, I make the total points on the assessment add up to 100 so that the points translate directly into a percent. I assign 12 points to each of the six basic problems, so all six together are worth 72 points, exactly in the range I want. Next, I look at what would distinguish a C grade, a B grade, and an A grade in terms of student responses on the remaining three problems. I might see that problem 9 is what I consider A-level work, so I make this problem worth 10 points. Then a student essentially cannot get an A without getting this last problem at least partially correct: a perfect score on problems 1–8 reaches only 90 points, the very bottom of the A range. That leaves 18 points to distribute between the remaining two problems (problems 7 and 8), so I simply assign each of them nine points. Below is a scoring rubric for this assessment.
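
The point assignments above can be checked with a short Python sketch. This is a minimal illustration, not a grading tool: the names `WEIGHTS`, `OLD_WEIGHTS`, and `percent_score` are hypothetical, and modeling partial credit as a fraction between 0 and 1 for each problem is an assumption of this sketch rather than something the post specifies.

```python
# Hypothetical weights for the nine-problem test described in this post.
# Problems 1-6 are basic (12 points each), 7-8 are deeper (9 each),
# and 9 is A-level work (10 points). The total is 100 points.
WEIGHTS = {**{p: 12 for p in range(1, 7)}, 7: 9, 8: 9, 9: 10}

# The older scheme: 5 points for each basic problem, 10 for each deeper one.
OLD_WEIGHTS = {**{p: 5 for p in range(1, 7)}, **{p: 10 for p in range(7, 10)}}


def percent_score(weights: dict[int, int], credit: dict[int, float]) -> float:
    """Return the percent of total points earned.

    `credit` maps a problem number to the fraction of its points earned
    (an assumed partial-credit model); unlisted problems earn nothing.
    """
    earned = sum(points * credit.get(problem, 0.0) for problem, points in weights.items())
    return 100 * earned / sum(weights.values())


# A student who answers only the six basic problems correctly.
basics_only = {p: 1.0 for p in range(1, 7)}
print(percent_score(OLD_WEIGHTS, basics_only))  # 50.0 under the old weights
print(percent_score(WEIGHTS, basics_only))      # 72.0 under the new weights

# A student who answers problems 1-8 correctly but misses problem 9.
print(percent_score(WEIGHTS, {p: 1.0 for p in range(1, 9)}))  # 90.0
```

The same student response moves from a failing 50% to a passing 72% purely because of where the points sit, which is the kind of weighting decision the post argues should be made deliberately.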

I have used this system for the last 25 years, both as a teacher and as a coach sharing grading ideas with teachers. It answered my two original questions. A score of 59% on the assessment does warrant an F, because a student with this score has not shown evidence of the basic knowledge the test assesses. I also know exactly what the percentages mean on my assessments, because I have analyzed the problems to determine which ones need to be answered correctly to reach each percentage level. The percentages align with students showing evidence of what they know.

Students should be graded on what they know, the actual content of their knowledge, not on the points that happen to work out under past test-scoring practices. This is especially true today, when assessments have fewer problems overall and those problems vary dramatically in the depth of knowledge they require. Assess your own assessment-grading practices and develop a system that gives your students grades reflecting the knowledge they show evidence of mastering on your assessments.